VideoGameBench is a benchmark composed of a diverse suite of 23 curated video games split across a dev and test set, with an environment to evaluate and communicate with VLM-based agents. The task is to solve the core objective of each game, e.g. defeating the final boss in Super Mario Land or completing the entire single-player campaign for Age of Empires.

We collect gameplay scenarios from iconic games spanning multiple genres and decades. Each task requires models to understand visual game states, interpret objectives, and make strategic decisions to progress through levels or achieve specific goals. VideoGameBench challenges models to complete entire games with only raw visual inputs and a high-level description of objectives and controls, a significant departure from existing setups that rely on game-specific scaffolding and auxiliary information.

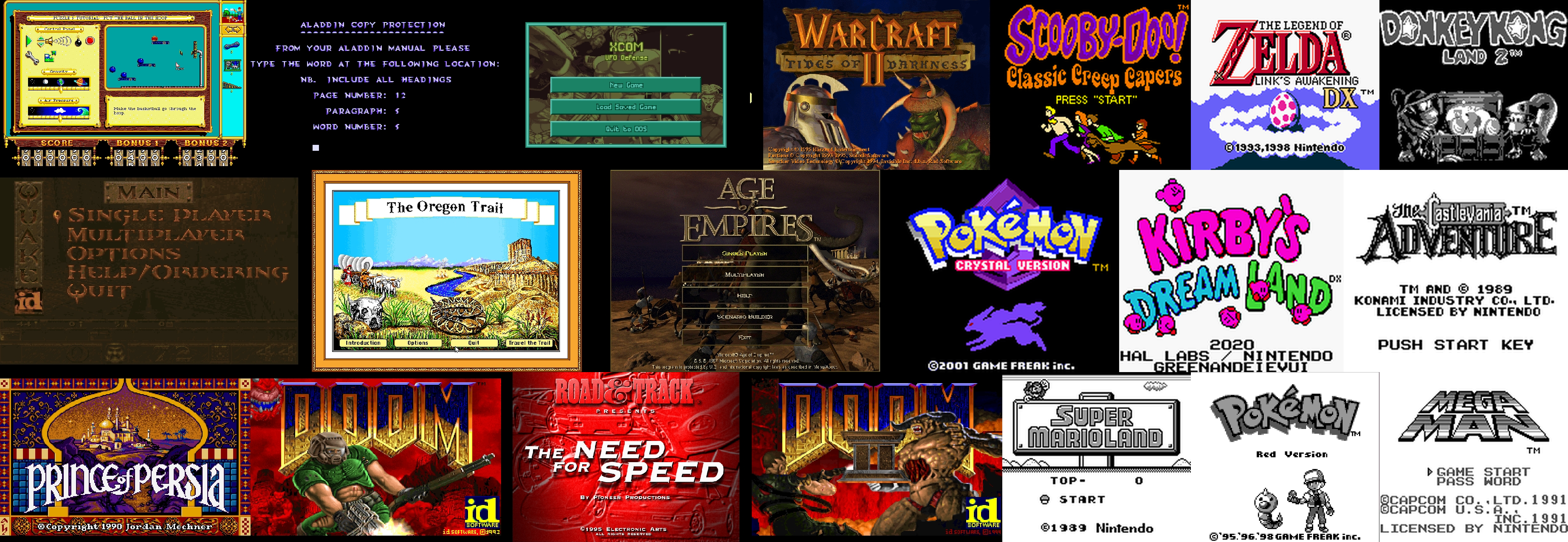

The list of public games on VideoGameBench, spanning 20 different games on MS-DOS and Game Boy consoles.

A huge bottleneck in playing games with VLMs is the high inference time for VLMs to produce an action. We therefore also introduce VideoGameBench Lite, a setting where the game environment pauses while waiting for the model's next action.

VideoGameBench represents a novel approach to evaluating AI systems on tasks that require both visual intelligence and strategic thinking, providing insights into how well current models can understand and interact with complex interactive environments. We previously released a research preview of this benchmark with sample clips here.

| Model | Overall Score | Civilization I | The Need for Speed | The Incredible Machine | Pokemon Crystal | Doom II | Kirby's Dream Land (DX) | Link's Awakening (DX) | Secret Game #1 | Secret Game #2 | Secret Game #3 |

|---|---|---|---|---|---|---|---|---|---|---|---|

VG-Agent + Gemini 2.5 Pro (gemini-2.5-pro-preview-03-25) |

0.48% | 0% | 0% | 0% | 0% | 0% | 4.8% | 0% | 0% | 0% | 0% |

VG-Agent + GPT-4o (gpt-4o-2024-08-06) |

0.09% | 0% | 0% | 0% | 0.9% | 0% | 0% | 0% | 0% | 0% | 0% |

VG-Agent + Sonnet 3.7 (claude-3-7-sonnet-20250219) |

0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% |

VG-Agent + Llama 4 Maverick (llama-4-maverick-17B-128E-Instruct-FP8) |

0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% |

VG-Agent + Gemini 2.0 Flash (gemini-2.0-flash) |

0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% |

Here are some examples of VLM agents playing games on VideoGameBench. These clips demonstrate both the capabilities and current limitations of state-of-the-art vision-language models when faced with real-time gaming challenges.

Example 1. Gemini 2.5 Pro plays Kirby's Dream Land in real-time, successfully navigating through the initial level and reaching the first mini-boss encounter. The agent demonstrates basic platforming abilities and enemy interaction.

Example 2. Gemini 2.5 Pro plays Civilization I in real-time, demonstrating poor strategic planning and resource management. The agent makes suboptimal military decisions, resulting in rapid defeat against Napoleon's forces.

Example 3. Gemini 2.5 Pro explores The Legend of Zelda: Link's Awakening, wandering aimlessly while searching for Link's sword. This demonstrates challenges in objective-oriented navigation and game state understanding.

Example 4. Claude Sonnet 3.7 attempts The Incredible Machine, a puzzle game requiring precise object placement and physics understanding. The agent exhibits difficulties with accurate cursor control and spatial reasoning.

Example 5. GPT-4o plays Pokémon Crystal, successfully selecting Cyndaquil as its starter Pokémon but subsequently losing track of the primary objective. This highlights issues with long-term memory and goal persistence in complex RPG environments.

Example 6. Our VG-Agent (using GPT-4o) plays Doom II (easiest difficulty) on VideoGameBench Lite, where the environment pauses while the agent thinks. The agent demonstrates basic combat abilities and navigation but struggles with complex strategic decisions.

As demonstrated in these examples, current state-of-the-art VLMs exhibit varying degrees of competency across different game genres. While they can perform basic interactions such as movement, menu navigation, and simple combat, they consistently struggle with higher-order cognitive tasks including strategic planning, spatial reasoning, objective maintenance, and adaptive problem-solving. These limitations underscore the substantial gap between current AI capabilities and human-level performance in complex interactive environments.

If you use VideoGameBench in your research, please cite our paper:

@article{zhang2024videogamebench,

title={VideoGameBench: Can Vision-Language Models Complete Popular Video Games?},

author={Zhang, Alex L. and Griffiths, Thomas L. and Narasimhan, Karthik R. and Press, Ofir},

journal={arXiv preprint arXiv:2505.18134},

year={2024}

}